METAME 1.0

MetaMe 1.0 is a personal project, still under development, that explores the future of virtual avatars in the metaverse. Powered by Unreal Engine 5, it combines photogrammetry, RealityCapture, facial tracking with Live Link, and Scan to MetaHuman Creator to generate hyper-realistic representations, with myself as the subject.

3D SCANNING

The subject was scanned with photogrammetry in Epic’s RealityCapture, under soft-shaded lighting and with a minimum of 20 photos, to produce accurate geometry.

Using Unreal Engine, the geometry is imported into MetaHuman Creator, where it is refined with the built-in editing tools.

FACIAL MOCAP

Using the Live Link app on a smartphone, real-time MoCap/face tracking can be added to the build. This feature can be used to turn anyone into a UE5-powered VTuber.

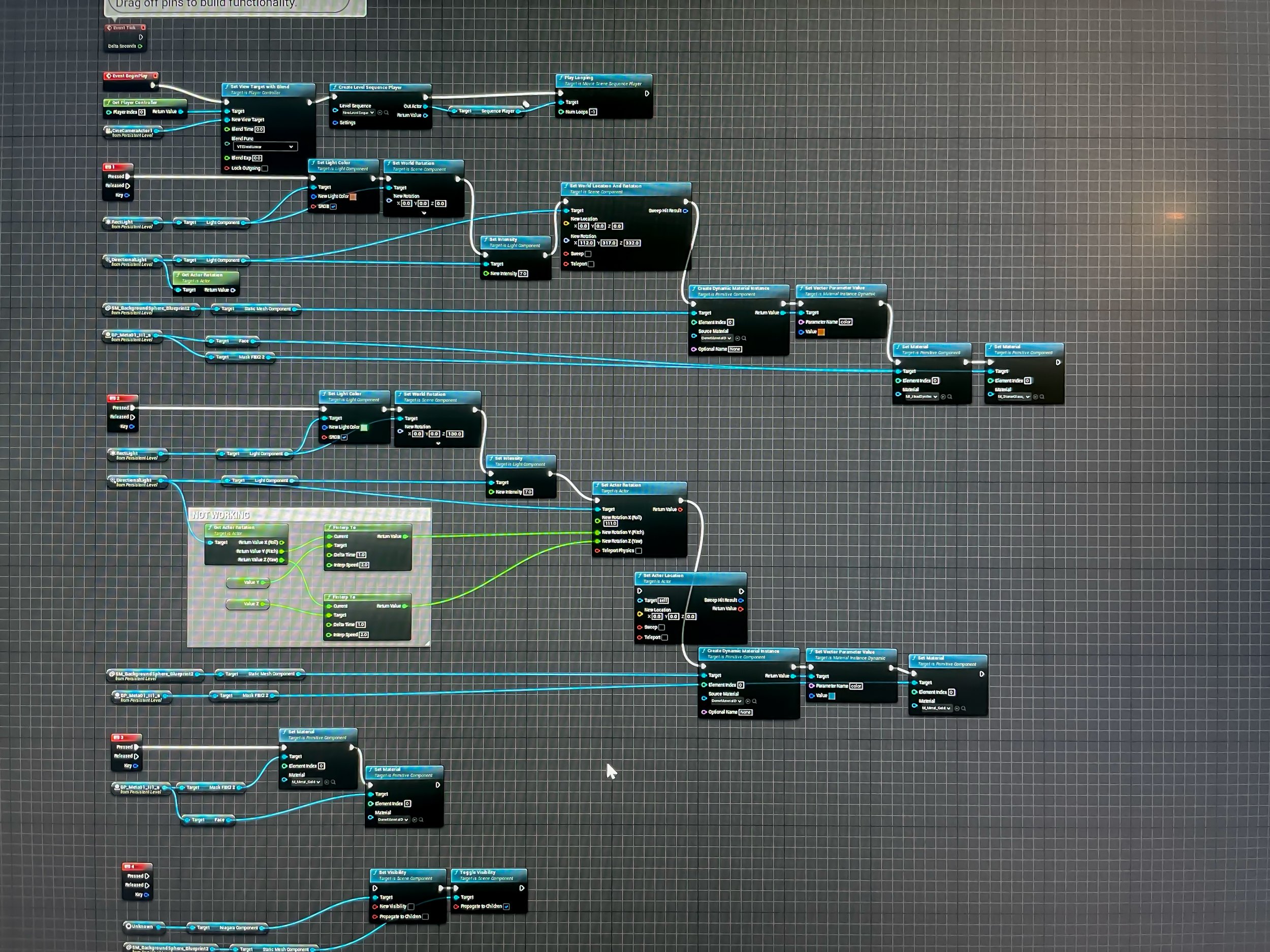

ADDING INTERACTION

Using Unreal Blueprints, I’ve added several types of interaction that let users control lights, materials, motion, and cameras from the keyboard.

The emissive eye pupils are connected to Audio Synesthesia so that they visualize the audio data in real time.
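
To give a rough idea of the logic behind the audio-reactive pupils, here is a minimal sketch in plain Python. It is not the Blueprint graph used in the project and it does not call any Unreal or Audio Synesthesia API. It only assumes that a loudness value is measured per block of audio, smoothed, and mapped to an emissive multiplier. The function names, the smoothing factor, and the glow range are all hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square loudness of one block of audio samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def smooth(previous, target, alpha=0.3):
    """Exponential smoothing so the glow does not flicker between frames.

    alpha is an assumed tuning value: higher reacts faster, lower is steadier.
    """
    return previous + alpha * (target - previous)


def emissive_intensity(loudness, min_glow=0.0, max_glow=10.0):
    """Map a loudness value to an emissive multiplier, clamped to [0, 1] first.

    min_glow and max_glow are hypothetical material parameter bounds.
    """
    clamped = max(0.0, min(1.0, loudness))
    return min_glow + clamped * (max_glow - min_glow)


def glow_per_block(blocks):
    """Return one emissive value per audio block, carrying smoothing state."""
    level = 0.0
    values = []
    for block in blocks:
        level = smooth(level, rms(block))
        values.append(emissive_intensity(level))
    return values
```

In the engine, the equivalent of `emissive_intensity` would feed a scalar parameter on the eye material each frame. The smoothing step is a design choice to keep the pupils from strobing on short transients.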